Co-creator (2025)

Many creatives feel that AI is their enemy, but what if AI could be a co-creator instead?

Written in just a few days (from scratch) in C/C++, Co-creator combines procedural generation with AI generation and human input to create a real-time rendered solar system which can be freely explored by the user.

In the beginning...

...there was an empty cocreator.c file.

I had only about a week to work on this project and had to choose my tools carefully:

- C/C++ with Raylib for the main application

- Python for the interactions with AI models

- GLSL as the shader language

- Local AI models with fast inference on limited hardware: Gemma2-9b for text and Stable Diffusion 1.5 for texture generation.

- ... copious amounts of caffeine

How does it work?

Creation of a solar system begins with human-written input where the user specifies the type of solar system that they imagine. An AI large-language model then interprets this request and fits it to the parameters of the procedural generation.
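To make the "fits it to the parameters" step a bit more concrete, here is a minimal sketch of how the C side might receive parameters from the language model. Everything in it is an assumption made for illustration: the `SystemParams` fields, the `key=value` line format, and the clamping ranges are hypothetical and not taken from the project. The point is only that model output should be validated and clamped before it reaches the procedural generator.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical parameter set; the field names are illustrative only. */
typedef struct {
    int          planet_count;
    float        star_temperature_k;
    unsigned int seed;
} SystemParams;

/* Keep model output inside a range the generator can handle. */
static double clampd(double v, double lo, double hi) {
    return v < lo ? lo : (v > hi ? hi : v);
}

/* Apply one "key=value" line (assumed format) to the parameter set.
 * Returns 1 if the line was understood, 0 if it was ignored. */
static int params_apply_line(SystemParams *p, const char *line) {
    char key[32];
    double value;
    assert(p != NULL && line != NULL);
    if (sscanf(line, " %31[^= ] = %lf", key, &value) != 2) return 0;

    if (strcmp(key, "planet_count") == 0) {
        p->planet_count = (int)clampd(value, 1.0, 12.0);          /* assumed range */
    } else if (strcmp(key, "star_temperature_k") == 0) {
        p->star_temperature_k = (float)clampd(value, 2000.0, 40000.0); /* assumed range */
    } else if (strcmp(key, "seed") == 0) {
        p->seed = (unsigned int)clampd(value, 0.0, 4294967295.0);
    } else {
        return 0; /* unknown keys are ignored rather than trusted */
    }
    return 1;
}
```

Calling `params_apply_line(&params, "planet_count = 99")` would, under these assumed ranges, store 12 rather than 99, so an over-enthusiastic model cannot push the generator outside what it supports.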

What does the AI do?

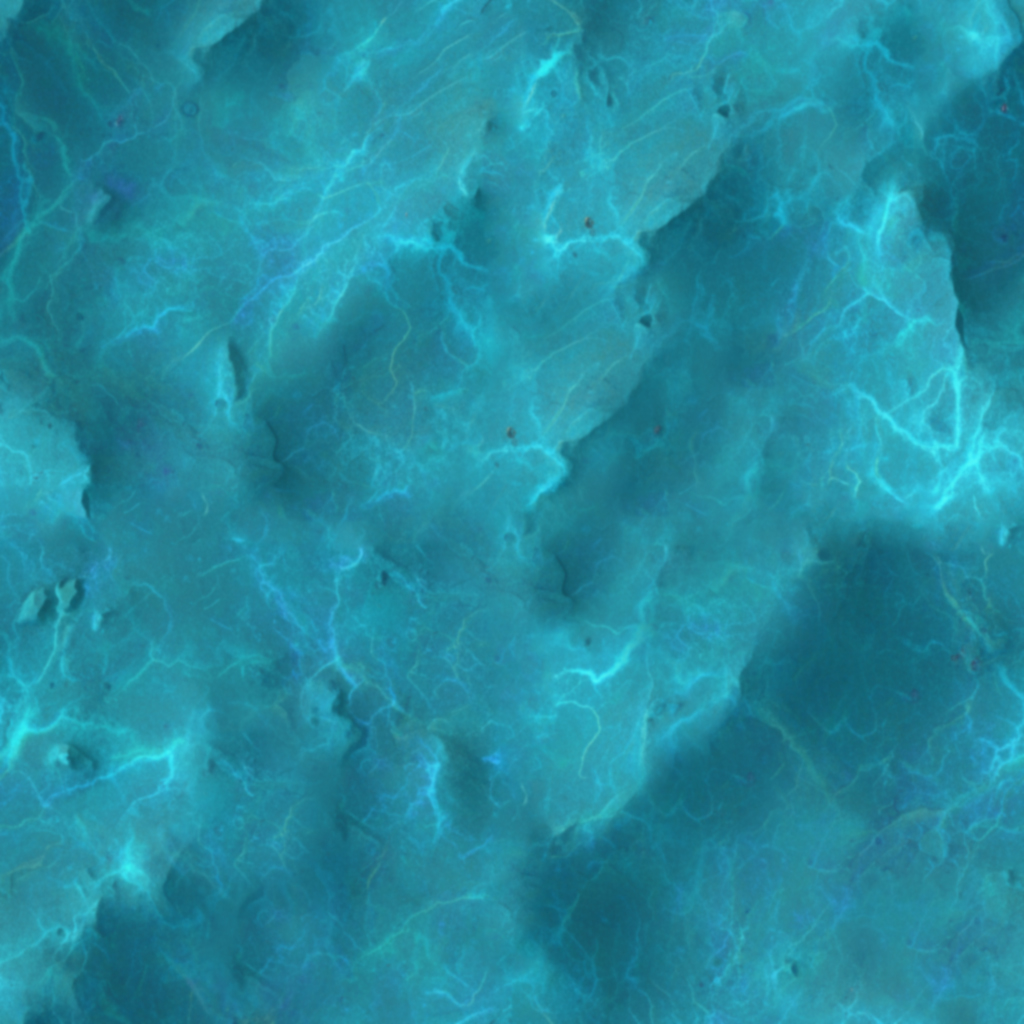

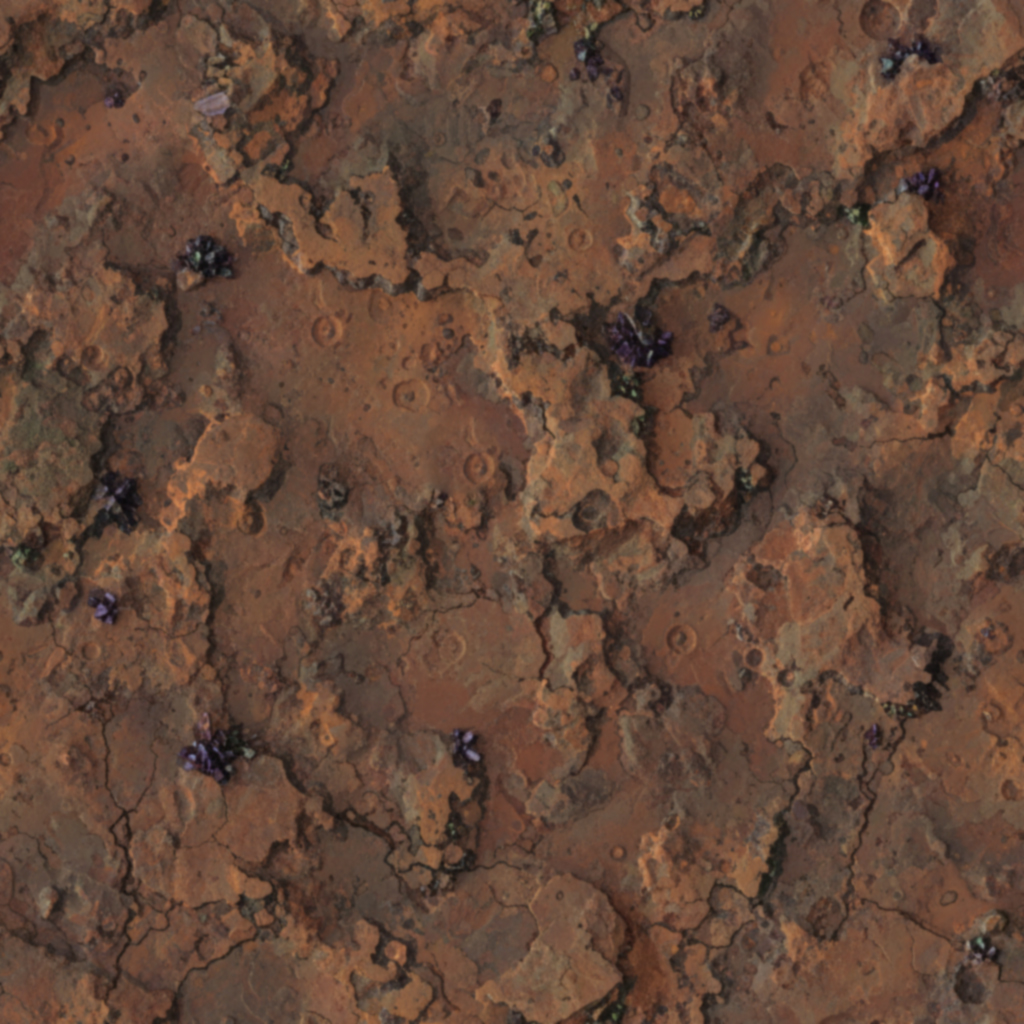

Alongside fitting the user description to the procedural generation parameters, the AI creates the textures for the planets, sun, and background using Stable Diffusion.

These textures then serve as inputs to the shaders that drive the visual appearance of the scene, and they add a huge amount of variety to the generation. Traditionally, textures are either hand-made in advance or procedurally generated through mathematical formulas. By letting an AI create the textures instead, Co-creator can more accurately fulfill the user's request and is capable of creating far more varied and interesting scenes.

Challenges

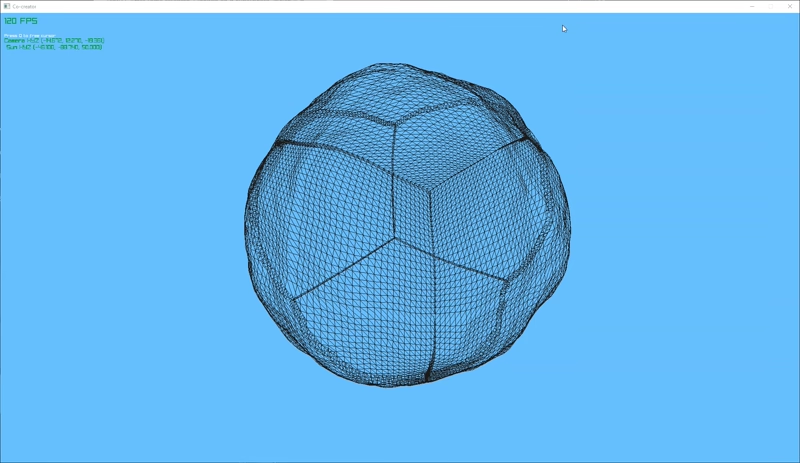

The first hurdle was picking the right geometry for the planets and star(s). After some research, I landed on a cubesphere: a cube that has been inflated like a balloon until it resembles a sphere.

Why not a "normal" sphere? While planets might look like simple spheres, in 3D rendering the topology (the way the geometry is laid out) of the sphere matters. Everything in 3D is made up of triangles, and a standard (UV) sphere has more of these around the poles than along the equator. Since our generated planets need to have landscape features, we need the geometry to be distributed as evenly as possible across the sphere, and that's what a cubesphere achieves.

Quadtree terrain tessellation

The second big hurdle was that the camera needs to be able to get up close to the planetary surface, meaning we need to generate more geometric detail on the fly. Modern GPUs can create this kind of detail with tessellation shaders, but these aren't readily supported by Raylib. Instead, I used a quadtree data structure which automatically subdivides the terrain nearest to the camera.

Quadtree skirts

My quadtree solution also implements skirts. Skirts are extra bits of geometry along the edges of each chunk of terrain which reduce the number of visible seams on the terrain. Without skirts, a chunk of high-detail terrain bordering a chunk of low-detail terrain leaves holes and seams in the planet's surface.

Vision

I had far more ideas than I had time. Once a solar system is generated, there is currently no way to save it. It would be really cool if users could print out a QR code which contains all the settings and seeds of their generated solar system, making their creation transportable. A generated solar system could then be viewed using an augmented reality mobile app.

Further extending the idea of co-creation, I considered that if this were a real art installation, users would together be building a small universe, each user adding their unique creation to a larger whole.

Some more mundane features I've been planning for are:

- A more novel interaction with the AI models, like through motion, distance, or heat sensors.

- Planets with atmospheres or rings

- Gas giants

- Moons for the planets!

- Nebulae

- Binary star systems and pulsars

I had intended to create a more interesting form of user input. Rather than text, I considered motion tracking through OpenCV or infrared sensors connected to an Arduino, but that proved a little too ambitious given the many other complex challenges in this project. Ultimately, I decided that it was more important to show the integration with AI models and focused on that rather than on a novel user-input mechanism.